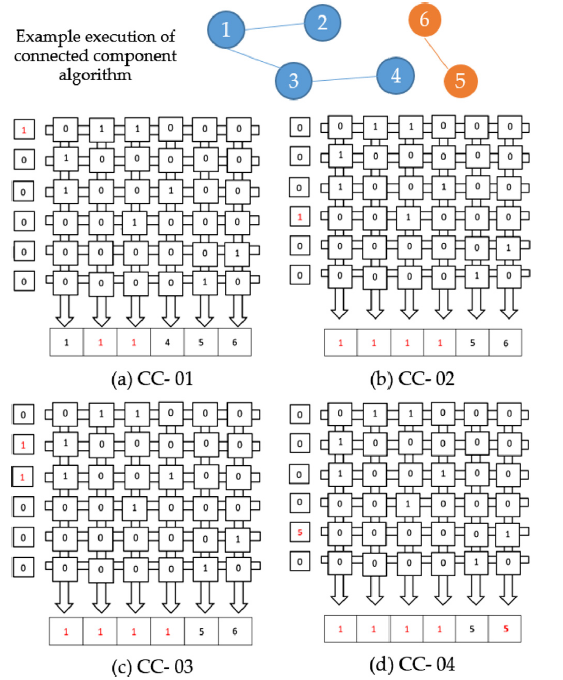

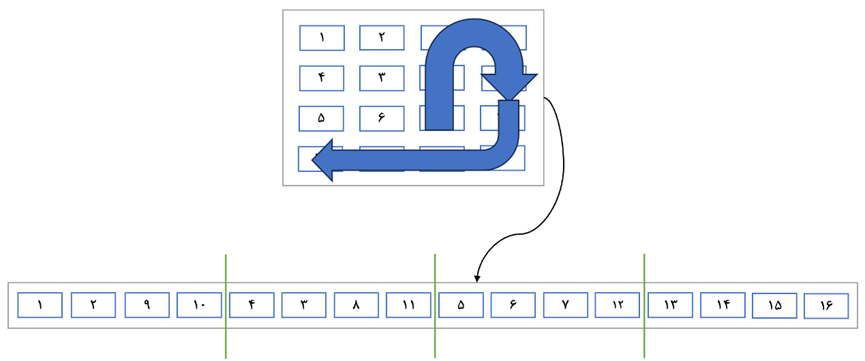

Deep neural networks nowadays require massive data processing to solve complex problems. The process of processing such programs faces limitations in the Von-Neumann architecture. The Von-Neumann architecture incurs significant costs in transferring data to the processing unit, including energy consumption, system resource utilization, and program execution delays. To address these limitations and reduce costs, accelerators with different architectures have been introduced. These accelerators leverage emerging memory technologies, enabling processing within the memory itself. Several in-memory processing accelerators have been developed to speed up the execution of deep neural networks. These accelerators utilize resistive memory for dot product operations. Currently, proposed architectures improve system performance by adding hardware components. In this research, the goal is to enhance the performance of in-memory processing systems for recurrent neural networks without the need to add hardware elements. For this purpose, an algorithm is proposed that aims to optimally distribute the data of each layer of the neural network in tiles, allowing faster access for each layer to the data of the preceding layer. The proposed algorithm is applied to the ISAAC architecture and in-memory processing accelerator with resistive memory for recurrent neural networks. Evaluation results demonstrate that this algorithm reduces the inter-tile communication latency by approximately 36% and 48% compared to the existing latency in the best-case scenario for data access in ISAAC architectures and resistive memory-based in-memory processing for recurrent neural networks, respectively.

Latest Posts

-

Oct 25, 2024

-

Oct 25, 2024